Entropy formula in data science. Entropy originated as a thermodynamic function, and we use it to measure the uncertainty or disorder of a system. In this article I will talk about the entropy formula as it is used in data science. The most commonly used form is called Shannon's entropy.

(Image: "Entropy: How Decision Trees Make Decisions" by Sam T, Towards Data Science.)

Low entropy means the data are more homogeneous: the values vary less from one another. P(x) is a probability distribution, so its values must lie between 0 and 1.

In the yes/no example, the total number of "no" examples is 5. Now we know how to measure disorder. To score a split, you add the entropies of the two children, each weighted by the proportion of examples from the parent node that ended up at that child.
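A minimal Python sketch of that weighted sum, assuming class labels are plain strings. The helper names `entropy` and `weighted_child_entropy` and the example split are hypothetical, chosen only for illustration:

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    n = len(labels)
    counts = Counter(labels)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())


def weighted_child_entropy(left, right):
    """Entropy after a binary split: each child's entropy weighted by its share of the parent's examples."""
    n = len(left) + len(right)
    return (len(left) / n) * entropy(left) + (len(right) / n) * entropy(right)


# Hypothetical split of a parent node holding 9 "yes" and 5 "no" labels
left = ["yes"] * 6 + ["no"] * 1
right = ["yes"] * 3 + ["no"] * 4
print(round(weighted_child_entropy(left, right), 4))
```

Here both children hold 7 examples, so each weight is 0.5; with unequal children the larger child contributes more to the sum.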

Contents of the lecture: 2 Entropy and irreversibility; 3 Boltzmann's entropy expression; 4 Shannon's entropy and information theory; 5 Entropy of an ideal gas. In this lecture we will first discuss the relation between entropy and irreversibility. Scientists have also concluded that in a spontaneous process the total entropy (of the system plus its surroundings) must increase. I've developed an algorithm that uses conditional entropy for feature selection in text classification. The bigger the entropy, the more unpredictable the event.

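Then we will derive the entropy formula for an ideal gas (the Sackur-Tetrode equation):

$$S(N, V, E) = N k_B \left[ \ln\left( \frac{V}{N} \left( \frac{4\pi m E}{3 N h^2} \right)^{3/2} \right) + \frac{5}{2} \right]$$

For a detailed calculation of entropy with an example, you can refer to this. Consider the formula for entropy and the reason for its negative sign: each probability lies between 0 and 1, so its logarithm is zero or negative, and the minus sign makes the entropy non-negative.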

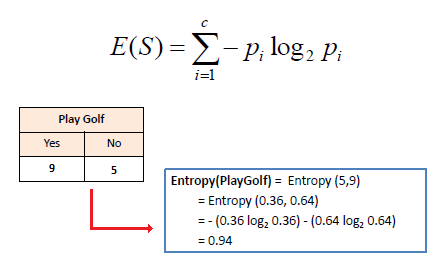

There are several different equations for entropy. High entropy means the data are more varied. The author mentions that conditional entropy values lie between 0 and log(number of classes), which in my case (two classes, natural log) is 0 to 0.6931472. The entropy of a set S is

$$E(S) = \sum_{i=1}^{n} -p_i \log_2 p_i$$

Expanding that summation for the four values of the decision attribute in your problem gives

$$E(S) = -p_{cinema}\log_2(p_{cinema}) - p_{tennis}\log_2(p_{tennis}) - p_{stayin}\log_2(p_{stayin}) - p_{shopping}\log_2(p_{shopping})$$
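To make the bound concrete, here is a sketch of conditional entropy H(Y | X) computed with the natural log, so for two classes it can never exceed ln 2 ≈ 0.6931472. It follows the standard definition; the word-presence feature, the spam/ham labels, and the function names are hypothetical:

```python
import math
from collections import Counter, defaultdict


def entropy_nats(labels):
    """Shannon entropy of a list of labels, using the natural log (units: nats)."""
    n = len(labels)
    return -sum((c / n) * math.log(c / n) for c in Counter(labels).values())


def conditional_entropy(feature, labels):
    """H(Y | X): entropy of the labels within each feature value, weighted by that value's frequency."""
    groups = defaultdict(list)
    for x, y in zip(feature, labels):
        groups[x].append(y)
    n = len(labels)
    return sum(len(ys) / n * entropy_nats(ys) for ys in groups.values())


# Hypothetical data: whether each document contains some word, and its class
contains_word = [1, 1, 0, 0, 1, 0]
label = ["spam", "spam", "ham", "ham", "ham", "spam"]

h = conditional_entropy(contains_word, label)
upper = math.log(len(set(label)))  # log(number of classes) = ln 2 for two classes
print(f"H(Y|X) = {h:.4f} nats, upper bound = {upper:.7f}")
```

A feature that separates the classes perfectly would drive H(Y | X) to 0, which is why lower conditional entropy marks a more useful feature.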

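Higher entropy means there is more unpredictability in the events being measured. For example, suppose you have some data about colors like this: red, red, blue. For a yes/no target, the total number of "yes" examples in our example is 9. The entropy is

$$H = -\sum_i P(x_i) \log_2 P(x_i)$$

where H is entropy, x_i represents the data tokens, P(x_i) is the probability of token x_i, and log2 is the logarithm to base 2.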
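A short sketch of the formula applied to the color data and to a 9-yes/5-no target. It assumes each P(x_i) is estimated from token frequencies; the function name is illustrative:

```python
import math
from collections import Counter


def entropy(tokens):
    """H = -sum P(x_i) * log2 P(x_i), with P estimated from token frequencies."""
    n = len(tokens)
    return -sum((c / n) * math.log2(c / n) for c in Counter(tokens).values())


colors = ["red", "red", "blue"]
print(round(entropy(colors), 4))  # 0.9183

# A yes/no target with 9 "yes" and 5 "no" examples
target = ["yes"] * 9 + ["no"] * 5
print(round(entropy(target), 4))  # 0.9403
```

Both values are close to, but below, the two-class maximum of 1 bit, because neither split of the data is perfectly even.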

Entropy is a way to measure uncertainty. A decision-tree algorithm calculates the entropy that results from splitting on each feature, selects the best feature, splits on it, and repeats this as the splitting continues. Entropy does not always lie between 0 and 1: that range holds for a two-class target with base-2 logarithms, while with k classes the maximum is log2 k. Next we need a metric to measure the reduction of this disorder in our target variable (class) given additional information (features, or independent variables) about it.
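One greedy step of that procedure might look like the following sketch, which scores each feature by information gain (parent entropy minus the weighted child entropy) and picks the largest, in the style of ID3. The toy rows, feature names, and helper names are hypothetical:

```python
import math
from collections import Counter, defaultdict


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def information_gain(rows, feature, target):
    """Parent entropy minus the weighted entropy of the children produced by splitting on `feature`."""
    parent = [r[target] for r in rows]
    groups = defaultdict(list)
    for r in rows:
        groups[r[feature]].append(r[target])
    n = len(rows)
    children = sum(len(g) / n * entropy(g) for g in groups.values())
    return entropy(parent) - children


def best_feature(rows, features, target):
    """Pick the feature whose split reduces entropy the most (ID3-style choice)."""
    return max(features, key=lambda f: information_gain(rows, f, target))


# Hypothetical toy dataset
rows = [
    {"outlook": "sunny", "windy": False, "play": "no"},
    {"outlook": "sunny", "windy": True, "play": "no"},
    {"outlook": "overcast", "windy": False, "play": "yes"},
    {"outlook": "rain", "windy": False, "play": "yes"},
    {"outlook": "rain", "windy": True, "play": "no"},
    {"outlook": "overcast", "windy": True, "play": "yes"},
]
print(best_feature(rows, ["outlook", "windy"], "play"))
```

Splitting on `outlook` leaves only one mixed child (`rain`), so its gain (about 0.667 bits) beats `windy` (about 0.082 bits). A full tree builder would recurse on each child until the nodes are pure or no features remain.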

And in general, if we let L_k be the entropy of a uniform distribution with k outcomes, then L_k = log2 k. For a binary target, entropy measured in bits lies between 0 and 1. Information gain is the degree to which the entropy would change if we branched on a given attribute.

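For example, H(1/6, 1/6, 1/6, 1/6, 1/6, 1/6) = log2 6 ≈ 2.585 bits for a fair die, and H(0.5, 0.5) = 1 bit for a fair coin. Each term uses log p(x), and the log is calculated to base 2. Entropy is a measure of disorder or uncertainty, and the goal of machine learning models, and of data scientists in general, is to reduce uncertainty.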
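These uniform-distribution values are easy to check numerically. This sketch writes each term as p·log2(1/p), which equals -p·log2 p, and skips zero-probability outcomes by the usual convention 0·log 0 = 0; the function names are illustrative:

```python
import math


def entropy(probs):
    """Shannon entropy in bits; each term p * log2(1/p) equals -p * log2(p), and p = 0 terms are skipped."""
    return sum(p * math.log2(1 / p) for p in probs if p > 0)


def uniform_entropy(k):
    """L_k: entropy of a uniform distribution over k outcomes (should equal log2(k))."""
    return entropy([1 / k] * k)


print(round(entropy([1 / 6] * 6), 3))  # fair die: 2.585
print(entropy([0.5, 0.5]))             # fair coin: 1.0
print(entropy([1.0]))                  # certain outcome: 0.0
print(entropy([0.9, 0.1]) < entropy([0.5, 0.5]))  # True: a skewed coin is less uncertain
```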

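The entropy of a distribution with a finite domain is maximized when all points have equal probability. Cross-entropy applies the same idea to a prediction: for a one-hot true label y and predicted probabilities t, the loss is $L(y, t) = -\sum_i y_i \ln t_i$. For example,

$$L(y, t) = -(0 \ln 0.4 + 1 \ln 0.4 + 0 \ln 0.2) = 0.92$$

$$L(y, t) = -(0 \ln 0.1 + 0 \ln 0.2 + 1 \ln 0.7) = 0.36$$

The entropy of any split can be calculated with the entropy formula.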
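A sketch of the cross-entropy calculation for the two three-class predictions above, using the natural log as those formulas do. It assumes y is one-hot and every predicted probability is strictly positive (`math.log(0)` raises an error, so real training code usually clips predictions); the function name is illustrative:

```python
import math


def cross_entropy(y_true, y_pred):
    """L(y, t) = -sum_i y_i * ln(t_i) for a one-hot label vector y and predicted probabilities t."""
    return -sum(y * math.log(t) for y, t in zip(y_true, y_pred))


print(round(cross_entropy([0, 1, 0], [0.4, 0.4, 0.2]), 2))  # 0.92
print(round(cross_entropy([0, 0, 1], [0.1, 0.2, 0.7]), 2))  # 0.36
```

Only the probability assigned to the true class matters, so the confident correct prediction (0.7) earns the lower loss.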

The entropy of a message is defined as the expected amount of information to be transmitted about a random variable X. 100% predictability means 0 entropy.

The entropy of the left child is 0.8113, I(size = small) = 0.8113, and the entropy of the right child is 0.9544, I(size = large) = 0.9544. Moreover, the entropy of a solid, whose particles are closely packed, is lower than that of a gas, whose particles are free to move. Note that to calculate log2 of a number, we can use the change-of-base rule: $\log_2 x = \ln x / \ln 2$.
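Below is a sketch of the change-of-base trick together with two-class entropies. The class proportions 1/4 and 3/8 are an assumption: they are one choice that reproduces the child entropies of 0.8113 and 0.9544 mentioned above, not values taken from the original example:

```python
import math


def log2_via_ln(x):
    """Change of base: log2(x) = ln(x) / ln(2)."""
    return math.log(x) / math.log(2)


def binary_entropy(p):
    """Entropy in bits of a two-class node where one class has proportion p (0 < p < 1)."""
    q = 1 - p
    return -(p * log2_via_ln(p) + q * log2_via_ln(q))


# Hypothetical class proportions for the left and right children
print(round(binary_entropy(1 / 4), 4))  # 0.8113
print(round(binary_entropy(3 / 8), 4))  # 0.9544
```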

And this is exactly what entropy does. I'm following the formula in Machine Learning for Text by Charu C. More formally, if X takes on the states $x_1, x_2, \ldots, x_n$, the entropy is defined as

$$H(X) = -\sum_{i=1}^{n} p(x_i) \log p(x_i)$$
